November 30, 2023

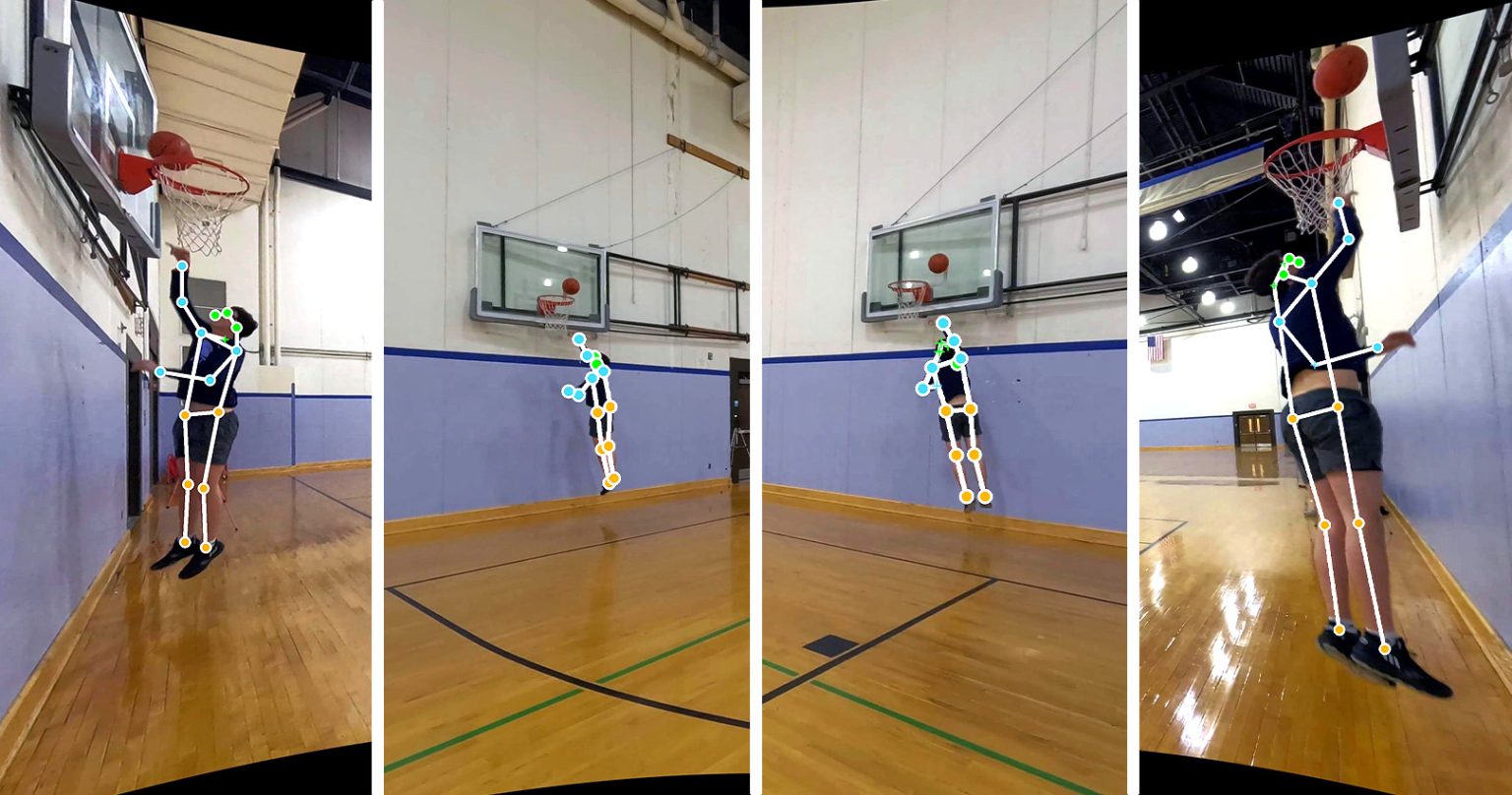

Above: composite image of simultaneous captures from all four cameras

UNC is transforming AI’s future with a human-centric approach in the Ego-Exo4D project, led by an international consortium of 14 universities in partnership with Meta AI.

UNC Computer Science is excited to announce its participation in the Ego-Exo4D project, an innovative venture that seeks to revolutionize AI. In a collaboration led by an international consortium of 14 universities in partnership with Meta’s Fundamental Artificial Intelligence Research (FAIR) team, UNC is helping to create a first-of-its-kind, large-scale, multimodal, multiview dataset designed to enhance AI’s perception, responsiveness, and understanding of human skill in real-world settings.

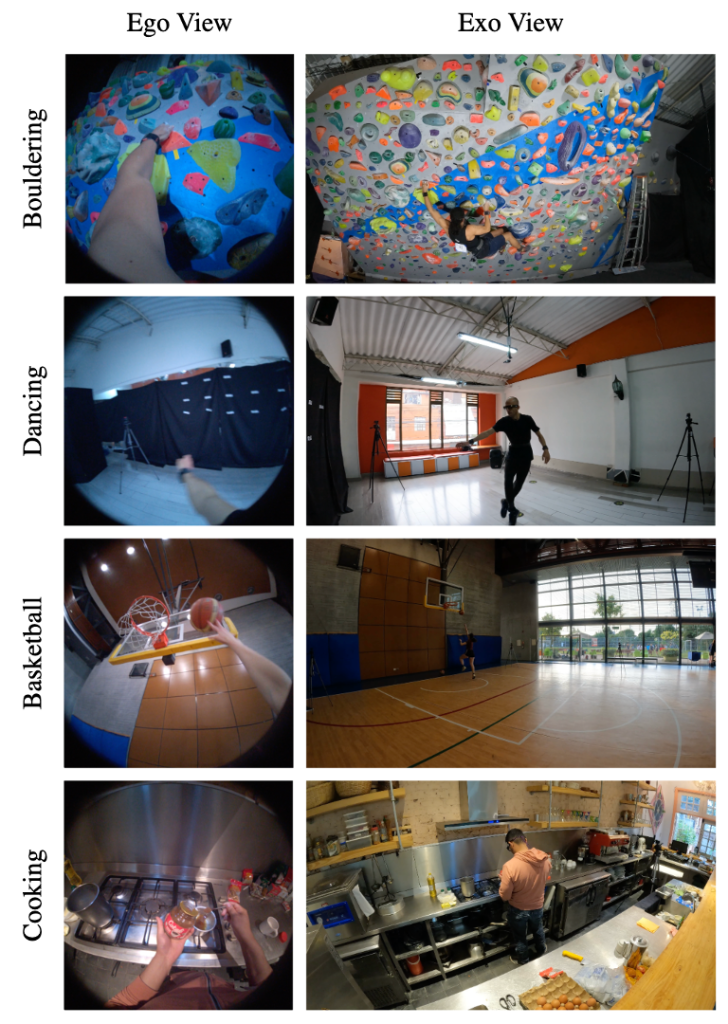

Imagine you are a basketball player wearing a camera that records everything exactly as you see it, capturing your experiences from your point of view: an “egocentric” view. Now, picture additional cameras placed around you, capturing your actions from various angles. These provide “exocentric” views, similar to playing a character in a video game. The Ego-Exo4D project combines these two perspectives to teach AI systems to perceive the world more like you do. Additionally, it collects expert analysis of your activity, resulting in data that includes not only play-by-play observations of how you shoot a three-point shot, but also guidance on how you can improve your body positioning to land that shot consistently.

Dr. Gedas Bertasius, assistant professor in the Department of Computer Science and UNC’s research lead on the project, explains the significance of this project: “Ego-Exo4D isn’t just about collecting data, it’s about changing how AI understands, perceives, and learns. With human-centric learning and perspective, AI can become more helpful in our daily lives, assisting us in ways we’ve only imagined.”

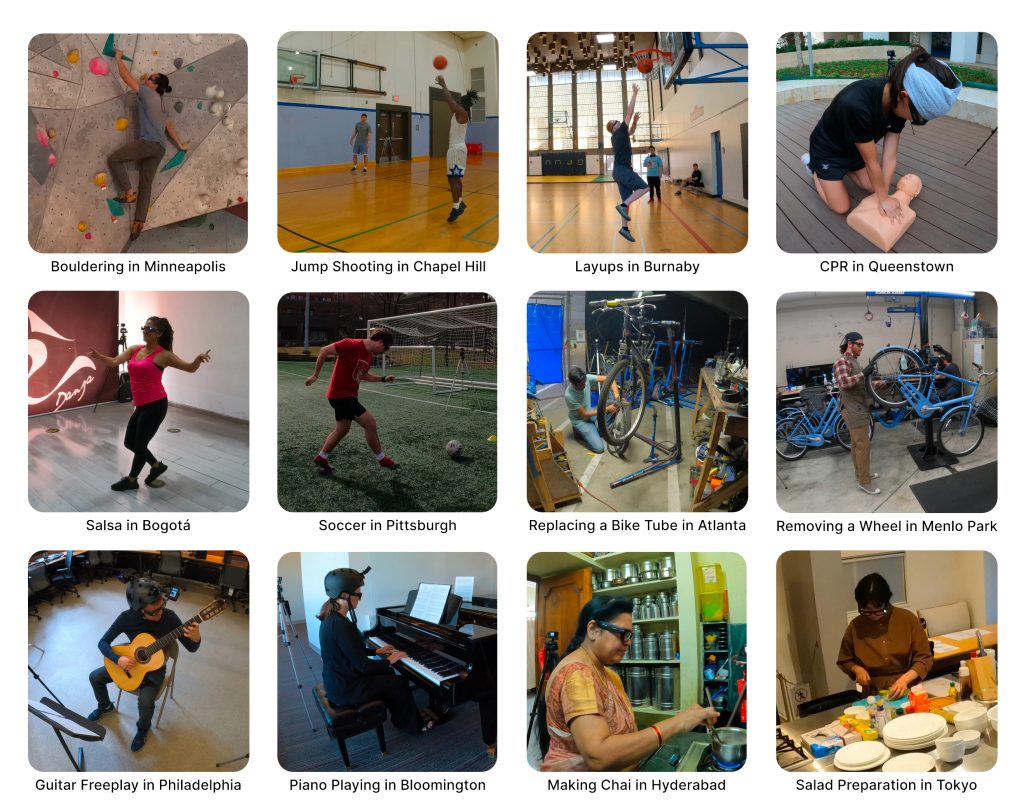

The project’s approach represents a significant departure from traditional AI learning methodologies. Current AI systems primarily learn from static, third-person images and videos – akin to a bystander’s view. What makes Ego-Exo4D’s approach unique is the multi-modal combination of recordings from both the ego and exo viewpoints with feedback and insight from skilled experts. The project focuses on skilled human activities, including sports, music, dance, and more, performed by more than 800 unique participants across 13 cities worldwide.

The combined approach allows AI to process and understand complex human activities in a way that’s closer to our natural perception and cognition, with the added ability to detect and understand the nuances of skill mastery. Bertasius adds, “By matching the data view with human skill and expertise, AI is not only learning complex tasks, but we are training it on how to train others with the perception of skill level. This has a lot of interesting applications from personalized AI coaching to skill evaluation.”

At its core, the Ego-Exo4D project is committed to ethical integrity and collaborative innovation. All resources, including over 1,400 hours of video data, are open-sourced, inviting the global research community to explore and expand upon this work. The project’s adherence to privacy and ethical standards ensures that this research not only advances AI, but does so responsibly and inclusively.

By training AI from a multi-modal perspective, UNC is paving the way for more intuitive and responsive AI systems. “The potential applications are vast,” Bertasius states. “What I hope most for is increasing access — so that someone who is interested in learning new skills (or improving) like basketball, dance, or music, can learn more effectively without the high cost of personalized instruction.”

In addition to Bertasius, the team at UNC includes doctoral students Md Mohaiminul Islam and Feng Cheng and undergraduate computer science majors Wei Shan, Jeff Zhuo, and Oluwatumininu Oguntola.

To learn more about the project and UNC’s contributions, visit the Meta blog or the Ego-Exo4D website.