August 20, 2020

Newly tenured Parker Associate Professor Mohit Bansal has been working for years on research into natural language processing (NLP), computer vision, and machine learning, areas of computer science which seek to help computers process and understand the world as competently as humans do. Early into UNC-Chapel Hill’s sixth month of remote operation, the work of Bansal and his lab has future applications in situations where people are isolated from each other. His research project funded by a U.S. Army Research Office (ARO) Young Investigator Program (YIP) Award is one such example.

Bansal, holder of the John R. and Louise S. Parker distinguished professorship, was recognized in 2018 with the ARO’s YIP Award to develop advanced machine learning and natural language processing models that can assimilate and interpretably reason over large-scale, uncertain information from several diverse modalities, such as text, images, and video, in order to automatically direct the actions of computer systems and autonomous robots. The project focuses on next-generation multimodal fusion networks for advanced grounding and actionable tasks and will have potential applications in a variety of areas, from homes and schools to warehouses and fire stations.

The first major area of Bansal’s work related to this ARO-YIP award centers around video and text captioning, reasoning, localization, retrieval, and question answering models. Bansal’s lab and collaborators have developed large-scale datasets as well as advanced algorithms to analyze both video and text in a way that allows a machine to answer questions in free-form natural language (English) about a video by identifying and locating objects and activities within the video. His students have improved these models via dense sub-regional descriptions and have also developed novel large-scale vision-language cross-modal representations, plus many-modal question answering tasks on images, tables, and text. Based on this line of work’s extensions, in a time of social distancing, students can learn from home with automated multimodal tutors that can assist students as they study and help find answers to their questions, without the need to manually pore over hours of classroom lecture video. These algorithms can even help isolated people with physical impairments to navigate and interact with the world by identifying, analyzing, and answering questions about the user’s environment.

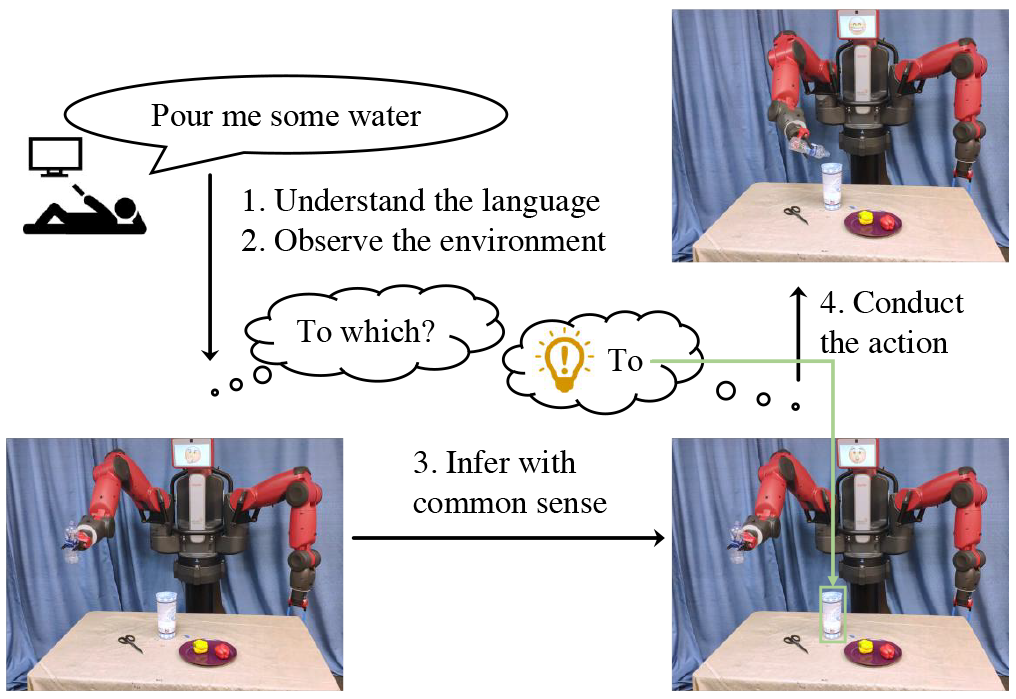

The second major area of the project involves remote autonomous navigation and action execution. These algorithms enable and enhance human-directed autonomous operation of robots in scenarios where an environment is unsafe or remote. In the event of a building fire or a dangerous virus outbreak, for example, a robot could be sent into a building to survey and search for people, animals, and objects. A human operator can watch video footage streamed by the robot in real time and give verbal instructions that reference objects and events in the video, just as if he or she were speaking to another human. The robot can then act by processing the instructions, detecting objects and obstacles in the environment, and planning its motions accordingly. Generalizability research by Bansal and his students allows these robots to follow instructions in unseen rooms and environments. Additionally, Bansal and his collaborators’ algorithms incorporate common sense to allow the robot to automatically understand incomplete or ambiguous instructions. If the robot receives an instruction like “pour some water,” it will automatically determine that it should pour water from the nearby pitcher into the empty cup on a table with several other objects. In the case of a quarantined person in need of a caretaker, a remote human operator could ask an in-home caretaker robot to perform household tasks.

“The role that robots and decision aides, including conversational agents, play in society will only increase in the future,” said Dr. Purush Iyer, an ARO program manager. “Humans and robots need to have a shared mental model that can facilitate communication, by providing context for disambiguation, as they cooperate on a mission in a seamless manner. Dr. Bansal’s work provides the foundational basis that would make human-agent teaming, of the future, possible.”

Bansal’s ARO-funded research is rapidly changing our understanding of what computers can accomplish when collecting different modalities of information, and its generalizability can make the research impactful beyond the applications imagined at the outset of the project. The work undertaken by Bansal alongside his students and collaborators may also one day play a part in helping us navigate the isolation brought on by a global pandemic.

“Automated understanding of text, video, and speech is essential for the next generation of computer interfaces, robotics, and digital assistants and Mohit is doing pioneering research towards these ends,” said Kevin Jeffay, chair of the UNC Department of Computer Science. “It’s going to be an exciting future.”

Bansal is the director of the Multimodal Understanding, Reasoning, and Generation for Language Lab (MURGe-Lab), part of the UNC-NLP Group at UNC-Chapel Hill. In addition to the ARO YIP Award described above, he has also received the Defense Advanced Research Projects Agency (DARPA) Young Faculty Award and Director’s Fellowship to fund his research into life-long NLP models that can self-learn their multi-task architecture and policies via multi-armed bandit and controller methods, and continuously adapt over time. His group has also developed state-of-the-art, widely used methods for automatic document summarization, stylistic and multimodal dialogue models, natural language inference, fact verification, interpretable multi-hop question answering, and adversarial robustness. He is also a recipient of the 2020 IJCAI Early Career Spotlight, the 2019 Microsoft Investigator Fellowship, and the 2019 NSF CAREER Award. His students have received the Microsoft Research Ph.D. Fellowship, the Bloomberg Data Science Ph.D. Fellowship, and NSF Graduate Research Fellowships, as well as CRA Outstanding Undergraduate Researcher Awards.

For more information, visit cs.unc.edu/~mbansal, murgelab.cs.unc.edu, and nlp.cs.unc.edu/.

ARO is an element of the U.S. Army Combat Capabilities Development Command’s Army Research Laboratory.