June 5, 2015

New Process Constructs 12,903 3D Models from 100 Million Crowd-Sourced Photos


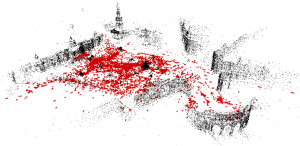

The red dots in this image represent locations which have been reconstructed as 3D models.

CHAPEL HILL, NORTH CAROLINA—The UNC Chapel Hill (UNC-CH) Department of Computer Science and URC Ventures (URCV) have built a 3D reconstruction of the world’s landmarks using computer vision and 3D modeling techniques. Using Yahoo’s publicly available collection of 100 million crowd-sourced photos and a single PC, UNC-CH and URCV created a new software process able to rebuild 12,903 3D models in six days. A demonstration will be presented at the 2015 Conference on Computer Vision and Pattern Recognition (CVPR) in Boston, Massachusetts.

Jared Heinly, Johannes L. Schönberger, Enrique Dunn and Jan-Michael Frahm of UNC-CH’s 3D Computer Vision Group have created software that processes world-scale datasets to create 3D models of locations all over the world. Unlike maps and aerial images, these models can be directly used for VR applications such as virtual tourism.

Previous projects have built 3D models of the landmarks of entire cities from datasets of up to a few million images, but reconstructing the landmarks of the entire world requires processing orders of magnitude more data.

The new framework is designed to process datasets of arbitrary size. It streams the images one at a time, assigning each to a cluster of related images. Because each image is analyzed only once, the streaming approach scales to far larger datasets. The key to the new algorithm is efficiently deciding which images to remember and which to discard because their information is already represented in a cluster.
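The article does not spell out how images are compared or which are kept, so the following is only a minimal sketch of the general idea of single-pass (streaming) clustering with a remember-or-discard decision. It assumes each image has already been reduced to a hypothetical global descriptor vector and uses cosine similarity as a stand-in for the real image matching; the names `stream_cluster` and `Cluster` and the thresholds `match_thresh`, `redundant_thresh` and `max_reps` are all illustrative, not the UNC-CH implementation.

```python
import math
from dataclasses import dataclass, field


def cosine(a, b):
    """Cosine similarity between two descriptor vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


@dataclass
class Cluster:
    representatives: list = field(default_factory=list)  # descriptors kept in memory
    size: int = 0  # all images assigned, including discarded ones


def stream_cluster(descriptors, match_thresh=0.8, redundant_thresh=0.95, max_reps=3):
    """Hypothetical single-pass clustering: every image is visited exactly once."""
    clusters = []
    for d in descriptors:
        # Find the cluster whose remembered images best match the new one
        best, best_sim = None, -1.0
        for c in clusters:
            sim = max(cosine(d, r) for r in c.representatives)
            if sim > best_sim:
                best, best_sim = c, sim
        if best is not None and best_sim >= match_thresh:
            best.size += 1
            # Remember the image only if it adds new information and memory allows;
            # otherwise discard it, since a near-duplicate is already represented
            if best_sim < redundant_thresh and len(best.representatives) < max_reps:
                best.representatives.append(d)
        else:
            # No sufficiently similar cluster: the image starts a new one
            clusters.append(Cluster(representatives=[d], size=1))
    return clusters


# Toy usage with made-up 3-D descriptors
images = [[1, 0, 0], [0.99, 0.05, 0], [0, 1, 0], [0.9, 0.3, 0], [0, 0.98, 0.1]]
clusters = stream_cluster(images)
print([c.size for c in clusters], [len(c.representatives) for c in clusters])
```

In this toy run the second and fifth images are near-duplicates and are counted but not stored, while the fourth image matches the first cluster but differs enough to be remembered. Bounding the representatives per cluster keeps memory use flat no matter how many images stream past, which is the property that lets a single pass scale.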

After the data association process is complete, sparse 3D models are built from the clustered images. This step takes less than a day, bringing the total processing time to slightly more than five days for 100 million images.

To test this method, researchers applied the framework to Yahoo’s publicly available collection of 100 million crowd-sourced photos, which are geographically distributed throughout the world. The program took 4.4 days to stream and cluster the entire 14-terabyte dataset on a single computer before building the 3D models of each of those sites. Stacked on top of each other, these photos would reach into the middle of Earth’s stratosphere (twice as high as airplane cruising altitude).
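The figures in the paragraph above (100 million images, 4.4 days of streaming, 14 terabytes) imply a sustained rate and an average file size, and the stacking comparison can be checked with simple arithmetic. The sketch below assumes decimal terabytes and a printed-photo thickness of about 0.25 mm; that thickness is an assumption, not a figure from the article.

```python
# Figures stated in the article
NUM_IMAGES = 100_000_000
STREAM_DAYS = 4.4
DATASET_BYTES = 14e12  # 14 TB, assuming decimal terabytes

# Assumption (not from the article): a printed photo is about 0.25 mm thick
PHOTO_THICKNESS_M = 0.25e-3

seconds = STREAM_DAYS * 24 * 3600
images_per_second = NUM_IMAGES / seconds            # sustained streaming rate
avg_image_kb = DATASET_BYTES / NUM_IMAGES / 1e3     # mean file size in kilobytes
stack_height_km = NUM_IMAGES * PHOTO_THICKNESS_M / 1e3

print(f"{images_per_second:.0f} images/s")  # 263 images/s
print(f"{avg_image_kb:.0f} KB per image")   # 140 KB per image
print(f"{stack_height_km:.0f} km stack")    # 25 km stack
```

Under these assumptions a single computer handled roughly 263 images per second, each about 140 KB on average. A 25 km stack lies within the stratosphere and is roughly twice a typical cruising altitude of 10 to 12 km, consistent with the article's comparison.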

A 3D rendering of the Pantheon using URCV’s reconstruction process

UNC-CH partnered with URCV to reconstruct the data in ultra-high resolution from the Creative Commons-licensed images. URCV used the algorithm’s output to construct the 3D models with world-scale stereo modeling technology. The results rely on URCV’s novel accuracy-driven view selection for precise scene reconstruction. To further improve the realism of the 3D scene models, a robust, consensus-based depth map fusion is applied, along with appearance correction. The world-scale stereo pipeline uses a scalable, efficient, multi-threaded implementation for faster modeling.
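The article does not describe URCV’s fusion method. As a generic illustration of what "consensus-based" depth map fusion can mean, here is a minimal sketch that keeps a pixel's depth only when enough views agree on it. It assumes the depth maps have already been warped into one common reference view (a real pipeline must reproject them using camera poses) and that depths are positive; the function `fuse_depths` and its parameters `rel_tol` and `min_support` are hypothetical.

```python
import math
from statistics import median


def fuse_depths(depth_maps, rel_tol=0.05, min_support=2):
    """Fuse aligned depth maps (lists of rows) by per-pixel consensus.

    Assumes all maps share the reference view's pixel grid and depths are > 0.
    A value of None means that view has no depth estimate for the pixel.
    Pixels without consensus are set to NaN.
    """
    height, width = len(depth_maps[0]), len(depth_maps[0][0])
    fused = [[math.nan] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            candidates = [d[y][x] for d in depth_maps if d[y][x] is not None]
            best = []
            # Each candidate depth acts as a hypothesis; gather the views agreeing with it
            for c in candidates:
                agreeing = [v for v in candidates if abs(v - c) <= rel_tol * c]
                if len(agreeing) > len(best):
                    best = agreeing
            # Keep the pixel only if enough views agree; outliers never enter the median
            if len(best) >= min_support:
                fused[y][x] = median(best)
    return fused


# Toy usage: three 1x3 depth maps already aligned to the same view
depth_maps = [
    [[2.0, 5.0, 1.0]],    # view 1
    [[2.05, None, 3.0]],  # view 2 (no estimate for the middle pixel)
    [[9.0, 5.1, None]],   # view 3 (9.0 is an outlier)
]
fused = fuse_depths(depth_maps)
print(fused)
```

Here the first pixel fuses to about 2.025 because the outlier 9.0 finds no support, the middle pixel fuses to about 5.05, and the last pixel becomes NaN because its two estimates disagree. Requiring agreement from several views trades some coverage for robustness against the noisy estimates common in crowd-sourced photos.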

The UNC-CH research group is planning an open-source release of the streaming software to the research community later this year.

The UNC-CH research material is based in part upon work supported by the National Science Foundation under Grant No. IIS-1252921, No. IIS-1349074 and No. CNS-1405847, as well as by the US Army Research, Development and Engineering Command Grant No. 911NF-14-1-0438.